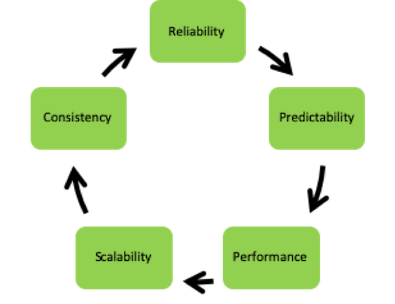

Software quality is often treated as an elusive and mysterious goal. Everybody wants it, and the strategies for achieving it are as varied as the companies that claim to have it. Interestingly, very few people can give a clear statement of what software quality actually is. IMHO this is the crux of the problem: how can a goal be achieved if it isn’t clearly defined?

There is no shortage of definitions of software quality. As is so often the case, Wikipedia provides an interesting starting point for study. The practical difficulty with many of these definitions is that they are qualitative descriptions and depend strongly on the observer’s viewpoint. A pragmatically useful definition of software quality must be objective and reproducible, and it must deliver quantitative results.

However, the definition of quality alone is not sufficient. A strategy describing how quality goals are to be achieved is essential. When and how shall quality metrics be collected? How shall these metrics be used and presented to make the quality level visible and communicable? Quality goals must be defined in the form of target values for these metrics. Both long-term and short-term goals are needed, so that developers have achievable targets against which progress can be shown. Finally, a plan must be put in place that leads towards these goals.

Static code analysis provides one mechanism for determining the structural quality of the code. Numerous competing tools for static code analysis are on the market (e.g. PC-lint, QA-C and Polyspace). In fact, the notion of “static” code analysis is being stretched considerably by tools that can predict aspects of the run-time behavior of the analyzed code.

However, even the compiler can provide a significant level of support, if one chooses to make full use of it. The gcc compiler provides the flags “-Wall -Werror”, which IMHO should always be mandatory. The first flag enables a large set of warnings that would otherwise be skipped (despite its name, it does not enable every warning gcc offers). The second flag makes gcc treat warnings as errors, forcing developers to take warnings seriously and eliminate them. Every compiler with which I am familiar has similar capabilities. I have often been shocked at the cavalier fashion in which many organisations dismiss compiler warnings as “meaningless”.
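Compiler warnings only become a quality metric once they are counted. The following is a minimal Python sketch, assuming the build log has been captured into a string and that diagnostics follow gcc’s usual `file:line:column: warning: message [-Wflag]` layout (with `-Werror` active, gcc reports them as `error: ... [-Werror=flag]`). `count_diagnostics` is a hypothetical helper, not part of gcc or any other tool.

```python
import re
from collections import Counter

# Matches gcc diagnostics such as
#   main.c:5:9: warning: unused variable 'x' [-Wunused-variable]
# and, when -Werror is active,
#   main.c:5:9: error: unused variable 'x' [-Werror=unused-variable]
# Assumption: file paths contain no colons or spaces.
DIAG_RE = re.compile(
    r"^(?P<file>[^:\s]+):(?P<line>\d+):(?P<col>\d+): "
    r"(?P<kind>warning|error): .*\[-W(?:error=)?(?P<flag>[\w+=-]+)\]$"
)


def count_diagnostics(build_log: str) -> Counter:
    """Count warning-option diagnostics per -W flag in a gcc build log."""
    counts = Counter()
    for line in build_log.splitlines():
        match = DIAG_RE.match(line.strip())
        if match:
            # The same flag is counted whether or not -Werror promoted it.
            counts[match.group("flag")] += 1
    return counts
```

Lines that carry no `[-W...]` tag (for example “In function ...” context lines or hard errors) are simply ignored, so the result counts only the findings that a warning option is responsible for.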

Additional ideas for structural quality metrics can be gleaned from MISRA or from the HIS Source Code Metrics. MISRA plays a significant role in embedded C programming and is often required in safety- and/or security-relevant applications. The HIS metrics, a product of the automotive industry, do a surprisingly good job of quantifying structural quality attributes that are often considered difficult to quantify.
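Metrics of the HIS kind are typically checked per function against upper limits. The sketch below is a minimal Python illustration, assuming an analysis tool has already produced per-function measurements. The metric names and every value in `LIMITS` are hypothetical placeholders chosen for illustration; the actual recommended ranges must be taken from the HIS document or from your own coding standard.

```python
from dataclasses import dataclass, field

# Hypothetical placeholder limits, for illustration only.
LIMITS = {
    "cyclomatic_complexity": 10,
    "statements": 50,
    "nesting_level": 4,
    "parameters": 5,
}


@dataclass
class FunctionMetrics:
    name: str
    # Metric name -> measured value, e.g. exported by an analysis tool.
    values: dict = field(default_factory=dict)


def limit_violations(functions, limits=LIMITS):
    """Return (function, metric, value, limit) for every exceeded limit."""
    violations = []
    for fn in functions:
        for metric, limit in limits.items():
            value = fn.values.get(metric)
            # Metrics that were not measured are skipped, not treated as violations.
            if value is not None and value > limit:
                violations.append((fn.name, metric, value, limit))
    return violations
```

The number of violations then becomes one more quantity that can be given a target value and tracked over time.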

A starting point for determining the functional quality of the code is provided by ASPICE, CMMI and other development maturity models. A recurring theme of these models is the demand for fully defined functional requirements, which in turn must be traceable to qualification tests performed on the final system. Essentially, when a test passes, this can be accepted as proof that the associated functional requirements are satisfied. The degree to which functional requirements are traceable to qualification tests can therefore provide a metric for the functional quality of the final system. Of course, this works best if the requirements are complete and correct.
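The traceability metric can be reduced to two simple ratios. This is a minimal Python sketch under stated assumptions: requirements and tests are identified by plain string IDs (the `REQ-`/`TC-` names in the usage are hypothetical), the trace links come from a requirements-management export, and a requirement counts as verified only if every test linked to it passed. A test with no recorded result is treated as failed.

```python
def traceability_metrics(requirements, trace, results):
    """Return (traced fraction, verified fraction) of the requirements.

    requirements: iterable of requirement IDs
    trace: dict requirement ID -> list of qualification test IDs
    results: dict test ID -> True (passed) or False (failed)
    """
    reqs = list(requirements)
    if not reqs:
        return 0.0, 0.0
    # Traced: at least one qualification test is linked to the requirement.
    traced = [r for r in reqs if trace.get(r)]
    # Verified: traced, and every linked test passed (missing result = fail).
    verified = [r for r in traced if all(results.get(t, False) for t in trace[r])]
    return len(traced) / len(reqs), len(verified) / len(reqs)
```

For example, with four requirements of which two are traced, and only one of those has all its tests passing, the sketch reports 50 % traced and 25 % verified.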

The first time tools are used to generate quality metrics, the results are likely to be disheartening. It is not uncommon for medium-sized and large projects to see many thousands of errors from the static code analysis tools alone. Simply tossing this list in front of the development team with the demand that the problems be “fixed” will ruin morale and will not be productive. Two different tool configurations are therefore needed. The weak configuration is the one in current use. The strong configuration represents the organisation’s long-term quality goal.

In the weak configuration, enough error and warning messages are suppressed that the overall number of problems needing correction can be dealt with within a short period of time. The strong configuration reflects the level of quality that the organisation wishes to achieve. Defining it requires an engineer to study the capabilities of the analysis tool and the meaning of the different checks it can perform. This expert must then make an informed decision about which checks to enable.
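One simple way to model the two configurations is as sets of enabled checks, with the weak set required to be a subset of the strong one. This is a minimal Python sketch under that assumption; the check names are borrowed from gcc warning options purely for illustration, and real analysis tools use their own identifiers. `check_configs` and `findings_to_fix` are hypothetical helpers.

```python
# Hypothetical configurations; check names borrowed from gcc warnings.
STRONG = frozenset({"unused-variable", "return-type", "implicit-fallthrough",
                    "sign-compare", "shadow"})
WEAK = frozenset({"unused-variable", "return-type"})


def check_configs(weak, strong):
    """Reject a weak configuration that enables checks the strong one lacks."""
    extra = weak - strong
    if extra:
        raise ValueError(f"weak enables checks not in strong: {sorted(extra)}")


def findings_to_fix(findings, weak):
    """Keep only findings whose check is enabled in the weak configuration."""
    return [f for f in findings if f["check"] in weak]
```

The developers only ever see the output of `findings_to_fix`, which keeps the workload achievable, while the full findings list under `STRONG` is still available for tracking the long-term goal.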

When setting short-term goals, a time frame of 2 to 4 months between milestones is typically reasonable. Targets can be set by asking each developer to eliminate, e.g., 50 warning or error messages each week. It can and should be accepted that the metrics will sometimes stagnate for a while. It should never be accepted that they deteriorate.
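The arithmetic behind such weekly targets, and the rule that metrics may stagnate but never deteriorate, can be sketched in a few lines of Python. The team size, starting count and weekly history in the usage are invented example values, and `weekly_target`, `weeks_to_zero` and `deteriorations` are hypothetical helpers.

```python
import math


def weekly_target(start_count, developers, per_developer=50, week=1):
    """Expected number of open findings after `week` weeks (never below 0)."""
    return max(0, start_count - developers * per_developer * week)


def weeks_to_zero(start_count, developers, per_developer=50):
    """Weeks needed to clear all findings at the agreed weekly rate."""
    return math.ceil(start_count / (developers * per_developer))


def deteriorations(history):
    """Indices of weeks in which the finding count went up.

    Stagnation (an equal count) is tolerated; any increase is reported.
    """
    return [i for i in range(1, len(history)) if history[i] > history[i - 1]]
```

With 2000 findings and six developers each fixing 50 per week, the team should be at 1400 after two weeks and finished after seven; a history of `[2000, 1700, 1700, 1750, 1400]` flags only week 3, since the stagnation in week 2 is acceptable.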

I consider it essential that the developers themselves are able to generate the quality metrics for the code for which they are responsible. This fosters an awareness of personal responsibility for the quality of the code that each developer delivers.
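For developers to see the metrics for their own code, findings have to be mapped to the people responsible for them. A minimal Python sketch, assuming ownership is recorded as a mapping from path prefixes to developer names (the paths and names below are hypothetical); the longest matching prefix wins, and files nobody owns are reported as “unassigned” rather than silently dropped.

```python
from collections import Counter


def findings_per_owner(findings, owners):
    """Count findings per responsible developer.

    findings: list of dicts with at least a "file" key
    owners: dict path prefix -> developer name
    """
    counts = Counter()
    for f in findings:
        matches = [p for p in owners if f["file"].startswith(p)]
        # The most specific (longest) prefix determines the owner.
        owner = owners[max(matches, key=len)] if matches else "unassigned"
        counts[owner] += 1
    return counts
```

Run locally against a developer’s own build, the same breakdown lets each person check their part of the code before it is delivered.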

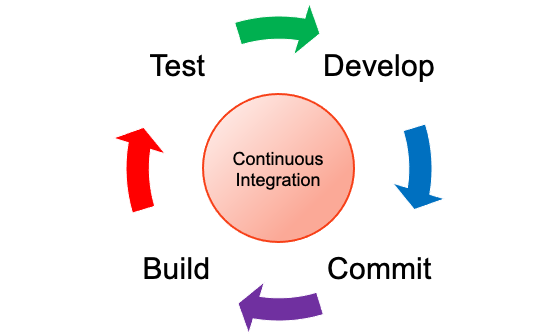

Regular informal results can be produced as part of the daily (or continuous) build. Formal results, which are recorded and used for planning and trend analysis, can be generated, for example, as part of the integration step, on a weekly basis, or in any other way that integrates painlessly into the development process.

At the end of each 2- to 4-month improvement cycle, a review is needed. Should the chosen definition of quality, as embodied in the choice of metrics, tools and configurations, be adjusted? The weak configuration will need to be reviewed and strengthened to enable additional warnings and errors for the next improvement cycle. The developers’ short-term improvement goals will need to be reviewed and adjusted to keep expectations realistic and achievable for the next cycle.
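Strengthening the weak configuration can be partly mechanised. The following Python sketch assumes one possible policy, which is my illustration rather than a prescribed method: enable the not-yet-enabled checks of the strong configuration in order of how few findings they currently produce, until the added findings would exceed the team’s capacity (`budget`) for the next cycle. The check names and counts in the usage are hypothetical.

```python
def next_cycle_checks(weak, strong, counts_by_check, budget):
    """Return (new weak configuration, findings it adds) for the next cycle.

    counts_by_check: current findings per check, measured with the strong
    configuration. budget: findings the team expects to fix next cycle.
    """
    # Cheapest checks first; ties broken by name for a stable order.
    candidates = sorted(strong - weak, key=lambda c: (counts_by_check.get(c, 0), c))
    chosen, total = [], 0
    for check in candidates:
        n = counts_by_check.get(check, 0)
        if total + n > budget:
            break
        chosen.append(check)
        total += n
    return frozenset(weak | set(chosen)), total
```

Picking the cheapest checks first keeps each cycle’s goal achievable; an organisation could just as well rank candidates by safety relevance instead, which is exactly the kind of decision the review should make.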

Quality metrics enable clear communication, both with management within the organisation and with customers. Periodic collection of metrics using the strong configuration shows progress towards the chosen long-term quality goals; the overall trend of fewer warnings is a message in and of itself. In addition, comparing the two configurations (weak and strong) yields a simple “percentage achievement” of the overall quality goals: the number of checks enabled in the weak configuration divided by the number of checks enabled in the strong configuration.
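The “percentage achievement” figure is a one-line calculation once the configurations are expressed as sets of enabled checks. A minimal Python sketch under that assumption; `percentage_achievement` is a hypothetical helper, and the intersection guards against counting weak-only checks that the strong configuration does not contain.

```python
def percentage_achievement(weak, strong):
    """Share of the strong configuration's checks already enabled in weak, in %."""
    if not strong:
        raise ValueError("strong configuration enables no checks")
    return 100.0 * len(weak & strong) / len(strong)
```

For example, `percentage_achievement({"a", "b"}, {"a", "b", "c", "d", "e"})` gives 40.0. Note that this ratio weights every check equally; a check that produces thousands of findings counts the same as one that produces none.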

In summary, software quality need not be mysterious. Excellent concepts and powerful tools are available; the challenge is to choose the concepts and tools that will work best in your organisation. These can then be used to create a measurable, quantitative definition of quality. Once this is in place, it becomes a question of strategy and management: set the goals, define the strategy, make a plan and do the work, milestone by milestone.

What has not been discussed here (it deserves its own article) is how to integrate this into the overall development process without creating extra work for the developers. Suffice it to say that this too is a solvable problem.