It has been some time since I last added an article to this list. It has been a busy year and then Covid-19 came along and changed everybody’s plans. Please remain cautious and stay healthy!

Today I would like to revisit the concept of software re-use. Software re-use is something that everyone claims to strive for. But what counts as re-use and how can it be achieved? One of my consulting clients asked me to address this issue and to assemble some guidelines for software development which will encourage re-use. Those guidelines are reproduced here.

I apologize for the “text-only” format of this article; somehow none of the images I was able to imagine truly seemed to fit. As always, feedback and criticism are welcome.

Definition of Re-Use

The ultimate goal of re-use is to be able to use source code across multiple projects without the need for code changes. Due to changes in the environment (OS/RTOS, underlying processor, etc.) a need for recompilation can be expected. However, if code changes are needed, this is not re-use in the sense of this article.

The key question I would like to address is how to get there. What guiding principles must be observed to encourage the development of re-usable software?

Principle of the Minimum

Guideline 1: Never develop anything which is not needed

Many SW engineers tend to add functionality to their code to prepare for perceived future needs. Although this might seem farsighted, the result is that the final product contains code which is not used, i.e. dead code. Experience shows that such code is almost always inadequately tested. If it is ever needed, it is typically deeply flawed and does not quite fit the required use case. This in turn results in the need to debug and/or rewrite the code.

The key point is that much of the effort invested in preparing for future needs is wasted. Since developer time is such a valuable and scarce resource, this simply doesn’t make sense. Don’t invest that effort until that capability is needed and then make sure that it works and fits the use case. If a future need is foreseen, try to ensure that it will be easy to add in when needed. But don’t actually develop it until it is needed.

Interface Principles

Guideline 2: An interface shall provide all interface users with mutual anonymity

Every interface has the purpose of supporting structured communication between two or more interface users. Ideally, the users will only see the interface. They should have no visibility of the communication partners on the other side of the interface.

Every detail that becomes known about the partner(s) will restrict software re-use. If a communication partner is changed for any reason, publicly visible details must be treated as invariant and must be preserved. If one of these invariant details is changed, the communication partners will also need to be adapted. This makes change, test and integration more difficult and contributes to increased cost in maintenance.

On the other hand, if the users of an interface are mutually anonymous, any needed changes can be introduced with impunity. As long as the interface itself is correctly used and/or provisioned, the other communication partners will neither know nor care.

Guideline 3: An interface shall hide the underlying data transport layer

In the IT world the Ethernet paradigm has been extremely successful as a data transport mechanism. The entire SOA concept was built upon the ability to extend this physical transportation mechanism to provide a logical interface abstraction. Many communication frameworks (e.g. DDS, CORBA) assume the use of Ethernet. In this environment it would not be necessary to hide the physical transport layer so strictly.

For the embedded world the situation is more complex. A multitude of different operating systems with widely varying capabilities exist. Resources are scarcer. Reliable and truly deterministic real-time behavior is sometimes essential. The result of all of these considerations is that different systems can and do use different transport mechanisms. If the physical mechanism is not hidden, the interface and the components that use it cannot be ported to another system without requiring code changes. This is a tremendous handicap for SW re-use.
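A minimal sketch of this idea, written in Python purely for brevity (the same layering applies in C or C++). All names here (`Transport`, `InProcessTransport`, `QueueTransport`, `SpeedInterface`, `SpeedSensor`) are hypothetical and not taken from any particular framework. The component talks only to the interface object; which transport sits underneath is decided when the system is wired together.

```python
from typing import Callable, List, Protocol


class Transport(Protocol):
    """Hypothetical abstraction over whatever moves bytes between partners."""

    def send(self, payload: bytes) -> None: ...


class InProcessTransport:
    """Delivers by direct call, e.g. when both partners share one process."""

    def __init__(self, receiver: Callable[[bytes], None]) -> None:
        self._receiver = receiver

    def send(self, payload: bytes) -> None:
        self._receiver(payload)


class QueueTransport:
    """Stands in for a mailbox or message queue offered by an RTOS."""

    def __init__(self) -> None:
        self.pending: List[bytes] = []

    def send(self, payload: bytes) -> None:
        self.pending.append(payload)


class SpeedInterface:
    """Infrastructure code: the only place that knows about the transport."""

    def __init__(self, transport: Transport) -> None:
        self._transport = transport

    def report_speed(self, speed_kmh: int) -> None:
        self._transport.send(str(speed_kmh).encode("ascii"))


class SpeedSensor:
    """Component code: it sees the interface and nothing below it."""

    def __init__(self, interface: SpeedInterface) -> None:
        self._interface = interface

    def sample(self, speed_kmh: int) -> None:
        self._interface.report_speed(speed_kmh)


# The same component source runs over two different transports.
received: List[bytes] = []
SpeedSensor(SpeedInterface(InProcessTransport(received.append))).sample(42)

mailbox = QueueTransport()
SpeedSensor(SpeedInterface(mailbox)).sample(42)
```

Swapping the transport touches only the wiring at the bottom; `SpeedSensor` is unchanged in both cases.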

Guideline 4: An interface shall be independent of serialization

Again I refer to the world of IT and cloud computing in which serialization is nearly always done using a text-based format with the two clear leaders being JSON and XML. In the world of embedded development serialization using text-based formats has disadvantages. They require more computation and result in larger data packets which consume more communication bandwidth.

A simple binary packing format will have clear advantages for most embedded environments. However, this requires knowledge of binary data formats and will demand extra effort to resolve these issues. Are all communication partners little-/big-endian? Do they all use the same floating-point format (including the special cases such as NaN)? Is padding necessary to accommodate the programming language and/or processor architecture?

The key point is that the components using an interface should not see any of these details. From the component point of view, as an interface user, the serialization must be completely invisible.
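As an illustration, the binary details could be confined to a pair of infrastructure functions like the ones below. This is only a sketch: the wire layout (little-endian, unpadded, a 16-bit sensor id followed by a 32-bit IEEE 754 float) and the names `encode_reading` and `decode_reading` are assumptions made for the example. The component passes and receives typed values and never sees the bytes.

```python
import struct
from typing import Tuple

# Assumed wire layout: "<" = little-endian without padding,
# "H" = unsigned 16-bit sensor id, "f" = IEEE 754 32-bit float.
_WIRE_FORMAT = "<Hf"


def encode_reading(sensor_id: int, temperature_c: float) -> bytes:
    """Infrastructure side: typed values in, bytes out."""
    return struct.pack(_WIRE_FORMAT, sensor_id, temperature_c)


def decode_reading(payload: bytes) -> Tuple[int, float]:
    """Infrastructure side: bytes in, typed values out."""
    sensor_id, temperature_c = struct.unpack(_WIRE_FORMAT, payload)
    return sensor_id, temperature_c


payload = encode_reading(7, 21.5)
sensor_id, temperature_c = decode_reading(payload)
```

If the byte order or packing ever changes, only `_WIRE_FORMAT` and these two functions are affected.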

Guideline 5: An interface shall be assembled from standardized basic idioms, each of which has a known behavior

On the one hand this seems trivial. An interface is composed of function calls and data objects. Every function call will have a signature and every data object will have a type. IMHO this is not enough. Different types of function calls can be identified. These function call patterns provide standardized basic idioms with which interfaces can be assembled.

Command functions: A command function is a fire-and-forget call sent by a client to a service provider, asking it to do something. The calling signature of the command function will depend on the command being invoked. A command call may be made at any time by any client which is connected to this interface. Depending on the underlying communication paradigm, the return value may be a meaningful response to the command or might only be an indication that the command was successfully sent.

Request-Response functions: A request-response function is actually a function pair. The calling signatures of these two functions will again depend on the request being made. The request is a function which may be called by a client, requesting something from a service provider. The reaction of the service provider to this request will end with a call to the response function with the arguments (if any) being used to transmit data back to the requester. The request-response function pair includes a specific expected behavior. It is forbidden for a single client to send a request twice in succession without waiting for the response in between. For the service provider it is expected that a single call to the response function will be the final action in reacting to a request call.

Broadcast functions: A broadcast function is also implemented as a function pair. The broadcast function is used by a service provider to send information to all “subscribers”. The paired function is a “subscribe” function which can be used by clients to register/cancel their subscription to this broadcast function.

Notification functions: Notification functions are similar to broadcast functions but are associated with the data objects that are part of the interface. For every data object a subscription and notification function will exist. The client can use the subscription function to register for notifications when a data object is updated. The notification function will be used by the service provider to inform the clients that the data object has been updated.

This is by no means an exhaustive list of possible interface idioms but suffices to show what is intended. Easy SW re-use demands that interfaces be assembled using agreed-upon idioms which will follow well-defined behavior patterns. These idioms in turn must fit into an interfacing scheme that is supported by the underlying communication framework.
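Two of the idioms described above can be sketched as follows. The class names `RequestResponsePort` and `NotifiedValue` are hypothetical, and integers stand in for whatever arguments a real interface would carry. The point is that the expected behavior (no second request before the response, notification on every update) lives in the idiom itself and not in each component.

```python
from typing import Callable, List, Optional


class RequestResponsePort:
    """Hypothetical client-side port for one request-response function pair."""

    def __init__(self, send_request: Callable[[int], None]) -> None:
        self._send_request = send_request
        self._awaiting_response = False
        self.last_response: Optional[int] = None

    def request(self, argument: int) -> None:
        # Enforce the rule: no second request before the response arrives.
        if self._awaiting_response:
            raise RuntimeError("previous request has not been answered yet")
        self._awaiting_response = True
        self._send_request(argument)

    def on_response(self, value: int) -> None:
        # Called by the infrastructure when the service provider answers.
        self._awaiting_response = False
        self.last_response = value


class NotifiedValue:
    """Hypothetical data object with a subscription/notification pair."""

    def __init__(self, initial: int) -> None:
        self.value = initial
        self._subscribers: List[Callable[[int], None]] = []

    def subscribe(self, callback: Callable[[int], None]) -> None:
        self._subscribers.append(callback)

    def unsubscribe(self, callback: Callable[[int], None]) -> None:
        self._subscribers.remove(callback)

    def update(self, new_value: int) -> None:
        # Provider side: store the value, then notify every subscriber.
        self.value = new_value
        for callback in list(self._subscribers):
            callback(new_value)


# Request-response: a second request is rejected until the response arrives.
sent: List[int] = []
port = RequestResponsePort(sent.append)
port.request(1)
try:
    port.request(2)
    second_request_rejected = False
except RuntimeError:
    second_request_rejected = True
port.on_response(10)
port.request(3)

# Notification: only updates made while subscribed are seen.
seen: List[int] = []
on_door_change = seen.append
door_state = NotifiedValue(0)
door_state.subscribe(on_door_change)
door_state.update(1)
door_state.unsubscribe(on_door_change)
door_state.update(2)
```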

Guideline 6: Interfaces must be versioned

One of the most common reasons for integration failures is the introduction of interface changes. The most common scenario is that the interface provider introduces the change. When the change is properly communicated to all clients and all needed adaptations are properly coordinated, no problems will arise. Unfortunately, this is a rare occurrence. It is much more common that changes are not properly communicated or coordinated. The first developer to introduce changes kicks off a round of chaos in which the system no longer functions correctly. Depending on what was changed and how the interface is defined, the problems may show up at compile time, at link time or at run-time.

If interfaces are versioned, then it becomes possible to check the version number of the interface against the expectations of the users. It becomes possible to detect version incompatibilities based on a formal comparison at the start of the build process. This saves a tremendous amount of time that would otherwise be lost chasing down the root cause of failures resulting from interface changes.

Interface version numbers only need two version levels – major and minor version numbers. The minor version number is incremented when an interface is expanded, e.g. by adding a new function or data object. Such an expansion will preserve compatibility with clients that expect the older interface version. The major version number is incremented when a change is made which will break compatibility, e.g. if a function is deleted, if the calling signature of a function is changed or if the type of a data element is changed.
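The compatibility rule that follows from this numbering can be written down in a few lines. The sketch below assumes a hypothetical `InterfaceVersion` type and an `is_compatible` helper; neither comes from an existing tool.

```python
from typing import NamedTuple


class InterfaceVersion(NamedTuple):
    major: int
    minor: int


def is_compatible(provided: InterfaceVersion, expected: InterfaceVersion) -> bool:
    """A client built against `expected` can use `provided` when the major
    versions match and the provider is at least as new in the minor version."""
    return provided.major == expected.major and provided.minor >= expected.minor


newer_minor_ok = is_compatible(InterfaceVersion(2, 3), InterfaceVersion(2, 1))
older_minor_ok = is_compatible(InterfaceVersion(2, 0), InterfaceVersion(2, 1))
other_major_ok = is_compatible(InterfaceVersion(3, 0), InterfaceVersion(2, 1))
```

A provider at 2.3 satisfies a client expecting 2.1, while 2.0 (missing an extension the client relies on) and 3.0 (a breaking change) do not.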

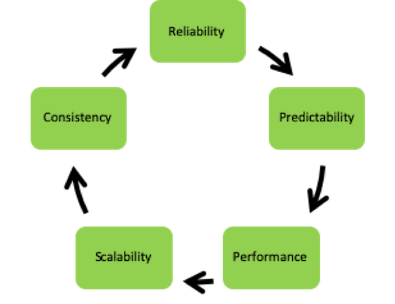

Properties of Re-Usable Components

Definition: A component is a software package which can be re-used without code changes

In many ways this is the holy grail of SW re-use. If code changes cannot be avoided, architecture erosion is the inevitable result. However, even in simple library routines (e.g. memcpy()) code changes may be needed when porting from one processor to another.

The challenge is to identify the invariants of a component and to separate them from the aspects that change. If a different processor, a different OS or a different project has some new combination of needs, adaptations will be unavoidable. However, if these adaptations can be encapsulated as trim parameters, initializations or by linking with a specific library (e.g. an OS abstraction library) and maintained separately from the source code of the component, the potential for re-use is preserved.

Generally, recompilation is unavoidable when moving a component to a new environment. This creates the temptation to configure for the new environment using compiler flags. Please resist this temptation. It is a slippery slope leading quickly to coding hell.

Another popular choice is to use initialization values at startup time to turn some code branches on or off. This has the disadvantage of leaving “dead” code in the binary image with all of the resulting costs and dangers.

A better choice is to use the initialization to “insert” knowledge into the component. The sorting and formatting of a list of records for display provides an excellent example. If the component that manages a list receives a pointer to a format function and a pointer to a comparison operator as initialization input, all the rest of the handling of the list can remain invariant. The choice of (e.g.) bubble vs binary sort is easily changed. What is important is the use of the right comparison operator to get the order right.
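The list example might look roughly like this. It is a sketch under assumptions: `RecordList`, `by_age` and `as_line` are invented names, and Python callables stand in for the function pointers that a C implementation would receive at initialization.

```python
from functools import cmp_to_key
from typing import Callable, Generic, List, Tuple, TypeVar

T = TypeVar("T")


class RecordList(Generic[T]):
    """Invariant component: it knows nothing about the record type.
    Ordering and formatting are inserted at initialization."""

    def __init__(self, compare: Callable[[T, T], int], fmt: Callable[[T], str]) -> None:
        self._compare = compare
        self._format = fmt
        self._records: List[T] = []

    def add(self, record: T) -> None:
        self._records.append(record)

    def render(self) -> List[str]:
        # The sort algorithm is an internal detail; only the comparison
        # operator supplied by the client determines the resulting order.
        ordered = sorted(self._records, key=cmp_to_key(self._compare))
        return [self._format(record) for record in ordered]


Person = Tuple[str, int]  # (name, age), purely illustrative


def by_age(a: Person, b: Person) -> int:
    return a[1] - b[1]


def as_line(person: Person) -> str:
    return f"{person[0]} ({person[1]})"


people: RecordList[Person] = RecordList(by_age, as_line)
people.add(("Al", 30))
people.add(("Bo", 25))
lines = people.render()
```

A project that needs a different ordering or display format supplies different functions; the source of `RecordList` stays untouched.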

Guideline 7: An “atomic” component is an encapsulation of some functionality which may only be accessed through formally defined interfaces (see the interface principles described above)

An atomic component is one that cannot reasonably be divided into smaller components. This is a qualitative judgement that is made by the responsible software designer or architect.

The important part of this statement is the second half, in which the concept of encapsulation is mentioned together with the principle of access restriction. The interfaces provide the only access to the functionality encapsulated in the component. If any other access is available, this must be considered an additional interface. It must be formally described and versioned. This seems simple and self-evident. Unfortunately, it seems to require enormous discipline and is rarely enforced as an architectural guideline.

Guideline 8: When using a component, it is sufficient for the client to know its interfaces

This is related to the principles stated above that interfaces should provide mutual anonymity and that components may only be accessed through their interfaces. If more than knowledge of the interface is necessary, anonymity is lost and re-use is impaired.

Guideline 9: Component dependencies must be known and documented

Ideally every component will include a description (a manifest) which includes a summary of its dependencies. Such dependencies will include (at least):

- system libraries

- infrastructure libraries and associated tooling (e.g. dedicated editors, code generators)

- interface versions (both imported[1] and exported[2] interfaces)

If dependencies are known and managed, an automated verification can be performed as part of integration (prior to the build) to ensure that the formal pre-requisites for success are met. This greatly simplifies the job of integration by ensuring that certain classes of error are identified and corrected much earlier in the development flow.
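A pre-build check of this kind could be sketched as follows. The manifest layout (a dictionary with `name`, `imports` and `exports`, and versions as `(major, minor)` pairs) and the function `find_unsatisfied_imports` are assumptions made for the example, not an existing format. System and infrastructure libraries are left out to keep it short.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, int]  # (major, minor)


def find_unsatisfied_imports(manifests: List[dict]) -> List[str]:
    """Return one message per imported interface that no component
    in the chosen set exports in a compatible version."""
    exported: Dict[str, Version] = {}
    for manifest in manifests:
        exported.update(manifest["exports"])

    problems: List[str] = []
    for manifest in manifests:
        for interface, (major, minor) in manifest["imports"].items():
            provided = exported.get(interface)
            if provided is None:
                problems.append(f"{manifest['name']}: no provider for {interface}")
            elif provided[0] != major or provided[1] < minor:
                problems.append(
                    f"{manifest['name']}: {interface} {provided[0]}.{provided[1]} "
                    f"does not satisfy {major}.{minor}"
                )
    return problems


# Hypothetical component manifests.
wheel_driver = {"name": "wheel_driver", "imports": {},
                "exports": {"wheel_sensor": (2, 0)}}
speed_filter = {"name": "speed_filter", "imports": {"wheel_sensor": (2, 1)},
                "exports": {"vehicle_speed": (1, 0)}}
dashboard = {"name": "dashboard", "imports": {"vehicle_speed": (1, 0), "fuel_level": (1, 0)},
             "exports": {}}

problems = find_unsatisfied_imports([wheel_driver, speed_filter, dashboard])
```

Here the check reports two problems before anything is compiled: `speed_filter` expects a newer minor version of `wheel_sensor` than is provided, and nothing exports `fuel_level`.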

Guideline 10: Components must be versioned, not just the individual source files

The finest granularity of re-use is at the component level. This is the level which requires versioning when delivering work products into the integration/delivery workflow. When publishing a component to the next stage along the delivery pipeline, the entire component must be versioned as part of the delivery.

Typically, three versioning levels will be needed for components. Major versions reflect changes that break compatibility. Minor changes will usually imply an extension in which something is added but downward compatibility is preserved. Revision changes will usually imply purely internal changes with no outside visibility (e.g. a bug fix).

Correct versioning of components is important in ensuring that the results of an integration are reproducible. Furthermore, it can be an important factor in doing formal verification prior to build and test to ensure that the chosen constellation of components can work together.

Deployment Principles

Guideline 11: The underlying application framework, with its communication and component infrastructure, defines the way in which threads/tasks are used in the system

I claim that the distribution of functionality across tasks/threads, processes, domains and nodes of a system is an aspect of deployment and belongs in the responsibility of the software system architect. This is not something that the individual developer should be deciding on an ad-hoc basis. If this claim is accepted, the easiest way to ensure this is to give this responsibility wholly to the underlying infrastructure.

Tooling is then needed which will allow the system architect to easily and flexibly specify processes and threads. This tooling must then allow the deployment of components into these threads and processes. The output from this tooling must then be used during the build process to create precisely the binary images that were specified. This is the best protection against architecture erosion.

Guideline 12: Concurrency issues shall be handled within the framework and not within the source code of individual components

Concurrency issues are non-trivial and can have far-reaching consequences. In complex systems standardization of the way in which concurrency issues are handled is an important factor in ensuring that different components will play together correctly. The best way to ensure standardization is to embed the concurrency solutions in the infrastructure/framework so that the developer never needs to worry about these problems.

The greatest danger when individual developers start creating their own solutions is that everyone will have their own favorite solution. When different solutions are mixed, chaos is the assured result.
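One possible shape for such a framework service is sketched below. It assumes a hypothetical `SerialExecutor` which runs all handlers of a component on a single worker thread, so the component itself (`Counter`) contains no locks and no threads. A real framework would offer more than this, but the division of responsibility is the point.

```python
import queue
import threading
from typing import Callable


class SerialExecutor:
    """Hypothetical framework service: every posted call is executed
    on one worker thread, one after the other."""

    def __init__(self) -> None:
        self._jobs: queue.Queue = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def post(self, handler: Callable[[int], None], argument: int) -> None:
        # May be called from any thread; the queue does the synchronization.
        self._jobs.put((handler, argument))

    def shutdown(self) -> None:
        # A None job tells the worker to stop after all earlier jobs.
        self._jobs.put(None)
        self._worker.join()

    def _run(self) -> None:
        while True:
            job = self._jobs.get()
            if job is None:
                return
            handler, argument = job
            handler(argument)


class Counter:
    """Component code: plain sequential logic, no concurrency handling."""

    def __init__(self) -> None:
        self.total = 0

    def on_increment(self, amount: int) -> None:
        self.total += amount


counter = Counter()
executor = SerialExecutor()


def produce() -> None:
    for _ in range(1000):
        executor.post(counter.on_increment, 1)


producers = [threading.Thread(target=produce) for _ in range(4)]
for producer in producers:
    producer.start()
for producer in producers:
    producer.join()
executor.shutdown()
```

Four threads post concurrently, yet `counter.total` ends at 4000 because only the worker thread ever touches the component.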

Guideline 13: Subsystems must share the nature and some of the properties of components

When looking at any larger package of functionality, it is quickly obvious that this is a composition of smaller blocks. Let us allow components to be small. However, let us also allow components to be assembled into larger collections to form subsystems. Such a subsystem can then be seen to have many of the same properties as a simple component. It will import interfaces for services that it requires, it will export interfaces for services that it provides, and it will hide internal interfaces from the outside world.

Such subsystems can be defined to provide a precisely tailored subset of available functionality for a specific customer project. Each subsystem is clearly associated with the next step upward in the architectural hierarchy. Subsystem definitions can be nested within each other to allow additional levels of abstraction when needed. A subsystem can also be connected with a “partial deployment” to allow the specialist group to provide a known and tested deployment scheme to the system architect for integration in the project.

Testing Principles

Guideline 14: Testing is a first-order goal of development – it is at least as important as feature completion

Cutting down on testing to save development time is all too common as an “emergency measure” when project development is behind schedule. When it happens, this is the death knell for team morale and quality goals.

I am an optimist. I believe fundamentally that every developer wishes to be proud of the products that he or she delivers. I believe that the most important goal of management should be to make this possible and then get out of the way. Tools must be provided that make testing simple. Time must be allocated in the planning for test development. The assumption of personal responsibility by the developers must be encouraged. Once this is in place, correct testing will be a no-brainer.

Guideline 15: Every engineer should be responsible for testing his/her own work products before they are passed forward through the delivery chain

A commonly propagated strategy is to assign testing responsibility to a separate test team. This is justified with the assumption that the test team has a stronger motivation to find errors and will test more strictly. Although there is some truth to this viewpoint, it has the consequence that the individual engineer is now free of the “burden” of testing. The delay between making changes and getting feedback from testing reduces the feeling of personal responsibility. I consider this to be a dangerous outcome. My preference is to have the engineers engaged, assuming personal responsibility. Independent testing is needed, but should never replace the testing done by the developers themselves.

Guideline 16: Do integration and testing hierarchically

This sounds so simple that it would seem to be unnecessary. I wish that were true.

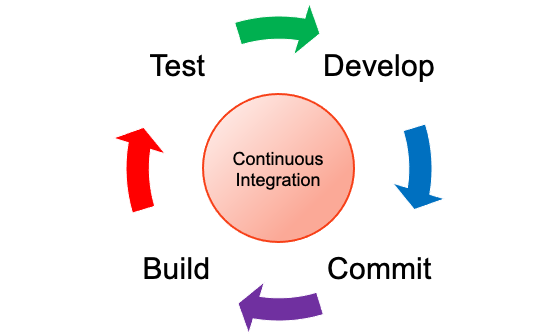

In many organizations the CI (Continuous Integration) paradigm is IMO perverted to “relieve” the developer from the burden of test responsibility. What can usually be observed is that the CI tooling essentially performs a big-bang integration with subsequent automated testing done on the full integration product. The only gain is that the integration is performed more frequently and that the resulting search for root causes, when the inevitable failures are seen, will not be quite as difficult.

There are numerous flaws in this way of thought. The key flaw is the confusion of the use of a tool (CI) with the thought of having a strategy. Tooling alone does not make a strategy!

A well thought out and executed integration and test strategy will start at the lowest level of development and will first verify individual functions. It will work its way up through the integration hierarchy, through classes, modules, components and subsystems, and will ensure that sufficient testing is performed at every step. Of course, the pre-requisite for working in this fashion is to have both a clearly defined overall architecture in which this integration hierarchy is defined and an organization and delivery structure which fits the architecture. Equally obviously, CI can offer a valuable contribution in automating and accelerating this activity.

Guideline 17: Never develop any functionality without also developing tests to verify this functionality

Ideally every function in your code will be subjected to unit testing which will verify both successful functionality and failure modes. The code for testing is a development product which should be saved along with the code itself (although perhaps segregated in a separate “test” directory) and which can be re-run automatically as part of the build process.

In the embedded environment there is a tendency to claim that testing is not possible without target hardware upon which tests can be run. The use of target hardware is indeed sometimes unavoidable. However, this is only true when direct HW interactions must be tested. Even most cases that require verification of real-time interactions can be subjected to some degree of verification in a pure SW environment running on a development work station.
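As a small illustration of testing without target hardware, the sketch below assumes a hypothetical `OverTemperatureMonitor` component that receives its sensor access as a function at initialization. On the target this function would read the real sensor; on the workstation a scripted fake takes its place.

```python
from typing import Callable, List


class OverTemperatureMonitor:
    """Component logic; the hardware access is supplied from outside."""

    def __init__(self, read_temperature_c: Callable[[], float], limit_c: float) -> None:
        self._read_temperature_c = read_temperature_c
        self._limit_c = limit_c

    def is_over_limit(self) -> bool:
        return self._read_temperature_c() > self._limit_c


def make_fake_sensor(samples: List[float]) -> Callable[[], float]:
    """Workstation stand-in for the sensor: replays scripted samples."""
    remaining = iter(samples)
    return lambda: next(remaining)


monitor = OverTemperatureMonitor(make_fake_sensor([20.0, 95.0]), limit_c=90.0)
first_check = monitor.is_over_limit()
second_check = monitor.is_over_limit()
```

The decision logic is exercised on the workstation, including the failure case; only the thin sensor access function remains to be verified on the target.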

Guideline 18: Never simply fix a bug. Start by extending the testing to demonstrate the bug

There are two major motivations behind this principle. First, the fact that the bug can be demonstrated in the testing makes it possible for the developer to provide proof that the bug is truly fixed. Secondly, if tests are maintained along with the source code and re-used, this makes it trivial to guard against this bug coming back in the future.
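In practice this could look like the following sketch. The function `average` and its bug (a crash on an empty list) are invented for the example. The first test was written before the fix to demonstrate the bug and now stays in the suite as a guard.

```python
import unittest
from typing import List


def average(values: List[float]) -> float:
    """Fixed version. The hypothetical bug report: an empty list caused
    a ZeroDivisionError instead of returning 0.0."""
    if not values:
        return 0.0
    return sum(values) / len(values)


class AverageRegressionTest(unittest.TestCase):
    def test_empty_input_returns_zero(self) -> None:
        # Written first to reproduce the reported bug; it failed until the fix.
        self.assertEqual(average([]), 0.0)

    def test_regular_input(self) -> None:
        self.assertEqual(average([1.0, 2.0, 3.0]), 2.0)
```

Stored next to the source and run with `python -m unittest` as part of the build, the test proves the fix and fails immediately if the bug ever returns.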

Guideline 19: Don’t be ideological! Accept that exceptions to these testing guidelines will sometimes be needed

All of these principles are guidelines; none of them is a “natural law”. Situations will arise in which the effort required to create an adequate test simply isn’t worthwhile. A typical example is when a statistically improbable timing interaction or hardware malfunction is the root cause. The number of attempts needed to reproduce the error a single time might well be astronomically high. Another example is when the emulation of the surrounding environment for a component or subsystem would require too much time and effort. An additional aspect to consider is that the tests themselves can be flawed. If a test is too complex, it is more likely to contain undiscovered errors. Know when to stop!

[1] An imported interface is one for which this component plays a “client” role.

[2] An exported interface is one for which this component plays the role of service provider.