I have a strong love/hate relationship with continuous integration. On the one hand, it is a valuable component of any team development effort. On the other hand, it is rarely done in such a fashion that it can actually deliver on its promise. This leaves me with the dilemma that I love what it could be and despair of what it all too often is.

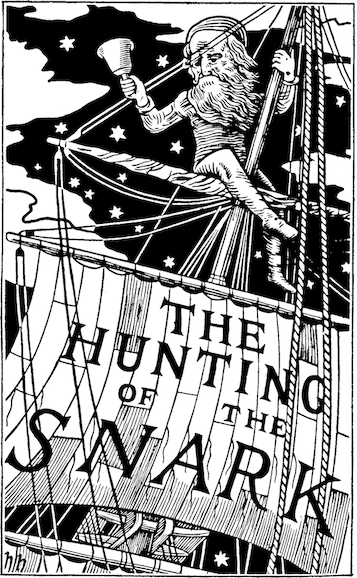

Part of my dilemma is based on my experience that many organizations present continuous integration as an integration strategy. This is wrong. A strategy must identify purposes and goals, establish the approach, establish criteria for evaluating strategic options and, finally, identify constraints and risks. All that continuous integration alone does is provide an approach. But an approach to what?

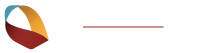

We have all had experience with big-bang integrations. Everyone knows that they don’t work well and are extremely wasteful. Nevertheless, organizations still exist that do things this way. But what does this have to do with continuous integration? One of the most common implementations of continuous integration couples a complete rebuild of a software system to the code control system. The idea is that every time something is changed in the code control system, a build is started. Ideally, quality checking and functional testing are part of the overall automation. The result is that a minimal quality check of the changed software is available soon after the change has been submitted. Since this checking is done automatically, the only expense is setting up the automation and providing a server on which it runs. After that you need only ensure that the results are monitored and, in case of error, analyzed and acted upon. The result is a continuous integration approach which works by performing a rapid sequence of big-bang integrations.

The idea is not bad, and the goals are admirable. The weakness lies in the promises that are made and in the omission of the challenges that must be met.

The first false promise is already visible in the commonly used name. Continuous integration is not done continuously. A build is triggered in response to a discrete external event: the submission of new code to the code control system. This is and remains discrete, not continuous. Although I freely acknowledge that this is a pedantic argument, this discrete, event-driven behaviour has important consequences.

Not every new submission will result in a build. Although every new submission may trigger a build, not every build will actually be performed. The reality is that many systems take a significant amount of time to build, and development teams are sometimes quite large. This means build triggers can be expected to arrive faster than they can be processed. The result is that, in most cases, several submissions are integrated together. The alternative is to have a sufficiently large number of build servers so that every change can trigger its own build. Do we really expect to scale the number of build servers with the number of developers and the duration of the build process? All of a sudden CI doesn’t appear so easy and inexpensive anymore.
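To get a feel for the numbers, here is a rough back-of-the-envelope sketch in Python. It assumes that submissions arrive evenly over the working day, which real teams never manage, so treat the results as optimistic averages. The function names and the example figures (50 developers, 4 submissions per developer per day, a 30-minute build, an 8-hour day) are my own illustrative assumptions, not measurements.

```python
import math


def builds_in_flight(developers: int,
                     submissions_per_dev_per_day: float,
                     build_minutes: float,
                     workday_hours: float = 8.0) -> float:
    """Average number of builds that would be running at any moment
    if every submission got its own build.

    This is simply arrival rate multiplied by build duration.
    All inputs are illustrative assumptions.
    """
    submissions_per_day = developers * submissions_per_dev_per_day
    workday_minutes = workday_hours * 60
    return submissions_per_day * build_minutes / workday_minutes


def servers_needed(developers: int,
                   submissions_per_dev_per_day: float,
                   build_minutes: float,
                   workday_hours: float = 8.0) -> int:
    """Build servers required so that, on average, no submission has to
    share a build with another one."""
    return math.ceil(builds_in_flight(
        developers, submissions_per_dev_per_day, build_minutes, workday_hours))


def submissions_per_build(developers: int,
                          submissions_per_dev_per_day: float,
                          build_minutes: float,
                          servers: int = 1,
                          workday_hours: float = 8.0) -> float:
    """Average number of submissions lumped into each build when the
    available servers run builds back to back."""
    load = builds_in_flight(
        developers, submissions_per_dev_per_day, build_minutes, workday_hours)
    return max(1.0, load / servers)


if __name__ == "__main__":
    print(servers_needed(50, 4, 30))         # 13
    print(submissions_per_build(50, 4, 30))  # 12.5
```

With these assumed figures, a single build server integrates roughly a dozen submissions per build, and giving every submission its own build would take about 13 servers. Double the team or the build time and the server count doubles with it.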

Not every build will make sense. One easy example is an interface change. It is normal for an interface change to result in changes to multiple components. It is rarely possible or sensible to submit all these changes in a single change package. However, if these changes are not integrated together, the resulting builds will fail. The automated continuous integration process is rarely intelligent enough to handle this case.

As already stated above, big-bang integration works poorly in any large system. Instead, a cascade of structured integrations should be preferred. A local code change will affect a component, which should be integrated and tested with the changed code. The component in turn is embedded in a larger component and/or subsystem, which must also be tested after integration of the changed component. This cascade of integrations may continue across several stages before it reaches a point at which the resulting subsystem is visible as a top-level element of the system architecture and should be integrated into the full system. Every step in this cascade must succeed before continuing up the chain to the next integration. Such a structured, cascaded approach to integration is rare because it requires detailed knowledge of software dependencies and structure. The software architecture only rarely captures this level of detail. Unfortunately, without this level of detail it is impossible to develop an appropriate integration strategy, let alone to implement such a strategy using the tool of continuous integration.
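The cascade described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not a description of any particular CI tool: the containment hierarchy, the component names, and the `integrate_and_test` callback are all hypothetical, and each element is assumed to be embedded in exactly one larger element. A real system, where a component may feed several subsystems, would need a full dependency graph.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical containment hierarchy: each element maps to the larger
# element it is integrated into. None marks the full system.
PARENT: Dict[str, Optional[str]] = {
    "parser": "compiler_frontend",
    "compiler_frontend": "toolchain",
    "toolchain": "full_system",
    "full_system": None,
}


def integration_chain(changed: str,
                      parent: Dict[str, Optional[str]]) -> List[str]:
    """Return the integration stages from the changed component up to
    the full system, in the order they must be performed."""
    chain: List[str] = []
    current: Optional[str] = changed
    while current is not None:
        chain.append(current)
        current = parent[current]
    return chain


def run_cascade(changed: str,
                parent: Dict[str, Optional[str]],
                integrate_and_test: Callable[[str], bool]) -> List[str]:
    """Integrate and test stage by stage, stopping at the first failure.

    Returns the stages that passed. The change may only proceed to the
    next level once the current level has succeeded.
    """
    passed: List[str] = []
    for stage in integration_chain(changed, parent):
        if not integrate_and_test(stage):
            break
        passed.append(stage)
    return passed


if __name__ == "__main__":
    # Pretend the toolchain-level integration test fails.
    result = run_cascade("parser", PARENT, lambda stage: stage != "toolchain")
    print(result)  # ['parser', 'compiler_frontend']
```

The point of the sketch is the early exit: a failure at the subsystem level never reaches the full-system build. Note that the hard part is not the loop but the `PARENT` table, which is exactly the structural knowledge that the architecture so rarely captures.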

My final complaint against continuous integration is that it is often used to delegate responsibility to an independent integration and test group. My experience indicates that developers produce work of significantly better quality when they feel personally responsible. This is why I find efforts to off-load testing to a separate group misguided. Yes, this does ensure greater independence. It also separates the testing from the people who have the best understanding of the software, and it absolves the developers of the responsibility to test their code prior to delivery. An independent test instance is necessary and has a role to play. But it should not be used to “take away the burden of testing” from the developer.

IMHO it is essential that each developer be able to perform a test-integration of his local code changes, running through the entire cascade of integration and testing. It must be possible to do this against the current head revision of the system or against a desired labeled (baselined) version. Only when the developer can provide proof that his changes are acceptable should they be made visible to other developers and be included in an automated continuous build system.

I have heard claims that the average developer inadvertently submits about one show-stopper flaw per year. In larger development teams this highlights the need to identify and repair such show-stopper flaws as early as possible. One possible mitigation for this problem is to make individual developers personally responsible for the quality of their work products and to give them the tools to do the required verification. This will enormously reduce the frequency of show-stoppers. The implementation of a cascaded integration strategy using continuous integration tooling will ensure that the remaining problems are quickly spotted. The combination can result in an enormous overall leap forward in quality and productivity.